Imperfect designs and inconvenient physics.

Microphones, loudspeakers and the human ear all work by a similar mechanism. Understanding the mechanics of these devices is an important part of sculpting a great live mix. This article outlines these concepts, and explains how to exploit the opportunities that these sound/electrical interfaces offer, and also how to avoid the problems that can arise because of their many limitations.

Even the most brilliantly engineered audio equipment exhibits predictable behaviour that can limit its effectiveness for live sound reinforcement. These behaviours can require difficult decisions when attempting to emphasize the desirable qualities in a mix, while efficiently exploiting the strengths of the sound system. Every minor change to a mix can require further adjustments because trade-offs are necessarily made along the way.

Sculpting an overall sound aesthetic that is easy to reinforce is probably the hardest, but most important thing that you can do to ensure that your band will sound great. For the most part, I know exactly how much I can do for a band’s sound before we’re done sound-check. It’s a really difficult thing to describe, but the “full, rich, thick” mix that I’m always striving for relies on the “combinability” of each of the different instrumental/vocal sounds in a band. This topic is easier to discuss with a full sound system to demonstrate on, but it is arguably the most important factor in determining which trade-offs are the “best” choices when mixing a band. This article builds on the concepts presented in the previous article (Live Sound Explained: 5. Acoustics and Sound Reinforcement).

SPEAKERS:

“Drivers” are the functional parts of a speaker. These components convert an electrical signal into physical motion (sound waves) by pushing and pulling against the air surrounding the speaker enclosure. This conversion is accomplished by passing an amplified electrical signal through a coil of wire which is attached to the centre of a cone. The cone itself is sealed around the edges to prevent air from rushing in and out of the speaker enclosure, as the cone pushes and pulls at it.

The enclosure itself is typically a rigid cabinet, carefully designed to minimize the kinds of resonances described in the previous article.

As you might deduce from the design of a typical speaker, there are different types of drivers. Each one is designed to accurately reproduce a specific range of frequencies. Although some speakers use many different drivers, for the sake of simplicity, this article will focus on the typical two-way design which consists of one tweeter and one woofer. Tweeters are both small and rigid with a short range of motion; this makes them well suited to produce high frequency sounds. Because high frequency sound is very directional, they usually have a specially designed “horn” which helps to disperse the sound evenly throughout a wide area. Woofers, on the other hand, are large cones with a much greater range of motion. While directionality is less of a problem for low frequency sounds, these waves are much larger than high frequency waves. In order to produce them efficiently, woofers must have a large surface area and a long range of motion so as to displace the greatest possible volume of air.
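To put rough numbers on how much larger low frequency waves are, here is a minimal sketch that converts frequency to wavelength. It assumes a speed of sound of 343 m/s (dry air at roughly 20 °C); the function name and the sample frequencies are illustrative choices, not values taken from any particular speaker.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 degrees Celsius


def wavelength_m(frequency_hz: float) -> float:
    """Return the wavelength in metres of a sound wave at the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_SOUND_M_S / frequency_hz


if __name__ == "__main__":
    # Illustrative frequencies spanning typical woofer and tweeter territory.
    for frequency in (50, 100, 1000, 3000, 10000):
        print(f"{frequency:>6} Hz -> {wavelength_m(frequency):.3f} m")
```

Under that assumption a 50 Hz wave is several metres long, while a 10 000 Hz wave is only a few centimetres long, which is consistent with woofers needing to move far more air than tweeters.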

The crossover point is the frequency at which the complete sound signal is split into smaller bands which are optimally suited for each driver. The sounds produced by all of the drivers in a speaker recombine acoustically, and ideally, reproduce the complete signal in the air. A typical crossover point for a simple two-way speaker is about 3000 Hz. This roughly corresponds to the F# three (and a half) octaves above “middle C”. Needless to say, tweeters typically produce very high frequencies. Sounds above that frequency are, for the most part, just overtones of sounds whose fundamental is produced by the woofer. As such, tweeters play an important role in accurately reproducing the tone of a sound. The low frequencies produced by the woofer require much more power than those sent to the tweeter. Although a considerable amount of power is dedicated to amplifying low frequencies, woofers are prone to a special kind of distortion when they are overdriven with several similar signals.
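As an aside, the note equivalent of the 3000 Hz crossover figure can be checked with a little arithmetic. The sketch below assumes equal temperament with middle C at 261.63 Hz (A4 = 440 Hz); the function name is hypothetical.

```python
import math

MIDDLE_C_HZ = 261.63  # assumption: equal temperament, A4 = 440 Hz
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def nearest_note(frequency_hz: float):
    """Return (note name, whole octaves above middle C, note frequency in Hz)."""
    # Each semitone is a factor of 2**(1/12), so count semitones with log2.
    semitones = round(12 * math.log2(frequency_hz / MIDDLE_C_HZ))
    octaves, step = divmod(semitones, 12)
    note_hz = MIDDLE_C_HZ * 2 ** (semitones / 12)
    return NOTE_NAMES[step], octaves, note_hz


if __name__ == "__main__":
    name, octaves, note_hz = nearest_note(3000.0)
    print(f"{name}, {octaves} octaves above middle C, about {note_hz:.0f} Hz")
```

Under these assumptions, 3000 Hz lands closest to the F# that sits three octaves and six semitones above middle C, at roughly 2960 Hz.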

Impedance is a strange, but very important behaviour to be aware of. A well engineered driver will have a fairly consistent resistance to all of the frequencies within the range that it was designed to handle. In practice, however, it is impossible to realize this ideal at all times. Depending on how the cone is currently moving, it will induce a counter-current which is subject to the cone’s inertia, its natural resonances (including those of the speaker cabinet), and the physical constraints on its throw (the seal around the cone constricts its range of motion). The counter-current induced while a driver is receiving a signal creates a variable resistance for each frequency present in the driving signal. Although this effect can manifest in a variety of ways, there is one behaviour which is particularly important to consider.

In practice, this varying, frequency-specific driver impedance makes it difficult or even impossible to effectively amplify two distinct signals which contain similar ranges of frequencies. Consider two signals, each of whose tone depends on the presence of a particular harmonic. An amplified mix containing both signals will reach a bottleneck once it is sent to the front of house speakers; the impedance of that common frequency will increase as the signal gets louder and will effectively compress just that frequency within the complete mix. When this happens, the perceived tone of each signal is altered, and neither one will sound perfectly clear. To make matters worse, this effect can even blur similar frequencies together, which can make it difficult to distinguish between the separate sounds in a mix.

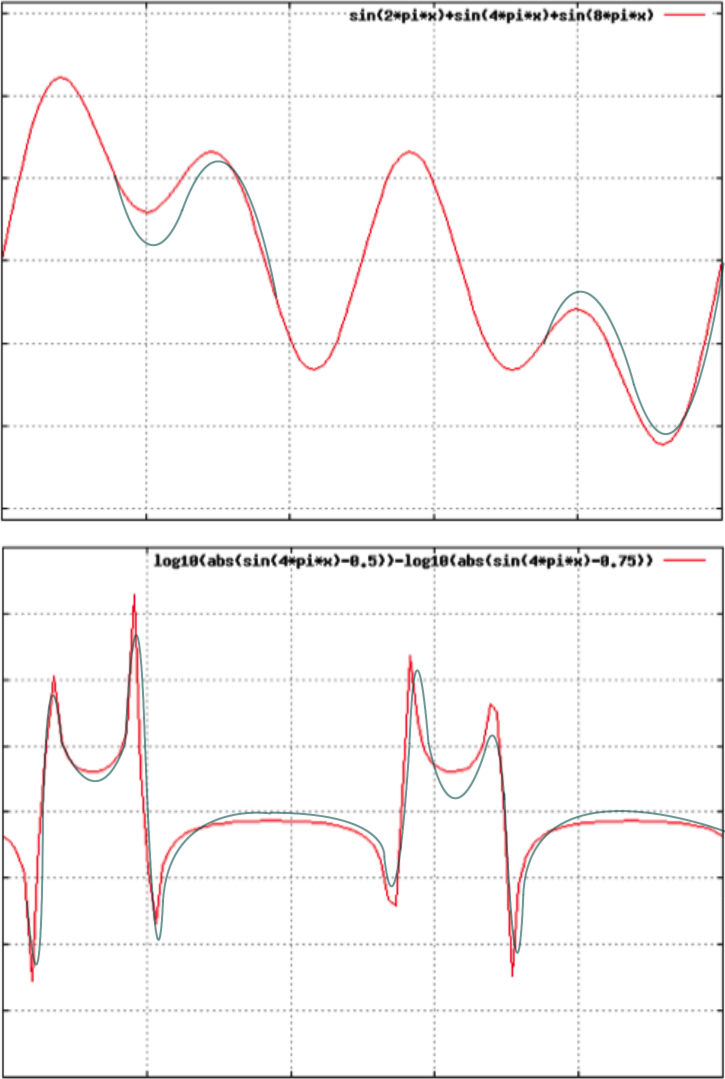

The real-world effect of driver impedance is most easily shown with (substantially) exaggerated illustrations like these.

The first diagram illustrates a relatively simple amplified signal in red. This represents the electrical signal sent to a driver from the amplifier. The blue lines which depart from this input signal represent how a driver might physically respond to this signal. Because the driver is pulled toward its resting point (near the middle of the wave’s range) it might not be able to change direction to reproduce the second peak accurately. This will harmonically alter (distort) the resulting sound. Furthermore, because the driver itself has mass, it will act under the influence of its inertia. Once the driver starts moving in one direction, it becomes more difficult to change its direction of motion.

The second diagram shows a more complex sound, illustrating how a driver’s inertia might cause it to lag a little bit behind the amplified signal. This creates a slight inaccuracy in the frequency of some of the sounds reproduced by the driver. This effect, however, does not change every frequency in the signal identically – it may affect each component frequency in a sound differently, depending on how that particular frequency relates to the complete signal. Extrapolating from this simplified illustration, you can imagine how this effect might make several similar frequencies in a mix seem to blur together. The slight variations in a driver’s response to similar frequencies can cause them to overlap at times, making them difficult to distinguish from each other.

Furthermore, anywhere that the driver’s motion is different from the amplified signal, it will induce a counter-current in the circuit, which is how this varying electrical resistance (impedance) at the driver is generated. To put these illustrations in perspective, the combination of waveforms in a typical front of house mix is likely to be far more complex than either of these diagrams. In fact, the second diagram is of comparable complexity to the waveform of just a *single note* on a distorted guitar.

Although there are other factors which play a role in this effect, most notably the amplifiers’ power handling, this is a good way to visualize the most substantial problem with live sound reinforcement. This effect is the main reason that a bad mix is hard to understand; it is the relationship between overtones that permits us to identify separate sounds in the mix, and even having just a few harmonics “out-of-whack” can impede our ability to distinguish individual instruments or understand what a voice is saying. The tone of every sound must be sculpted to sound distinct in the mix before it can be effectively amplified by a sound system. On any given night, the band whose sound requires the least amount of sculpting will always sound louder, tighter, and clearer than any of the other bands that will be performing on the same stage.

I’m not aware of a formal term to describe this behaviour, but for the purpose of this article I will refer to it as saturation. For example, if a mix contains many different sounds, all of which depend on the presence of a similar range of frequencies, the mix is saturated with that range of frequencies.
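One rough way to picture this kind of saturation is to list the harmonics of two notes and see which ones land close together. The sketch below is only an illustration: the 3% closeness tolerance, the function names, and the example notes (A at 110 Hz and E at 164.81 Hz) are assumptions chosen for the demonstration, not measurements of how a driver or an ear behaves.

```python
def harmonics(fundamental_hz: float, count: int = 8) -> list:
    """Return the first `count` harmonics (integer multiples) of a fundamental."""
    return [fundamental_hz * n for n in range(1, count + 1)]


def crowded_harmonics(fund_a: float, fund_b: float,
                      tolerance: float = 0.03, count: int = 8) -> list:
    """Return pairs of harmonics from two notes that sit within `tolerance`
    (as a fraction of the lower frequency) of each other.

    The tolerance is a hypothetical threshold for "similar", nothing more.
    """
    pairs = []
    for fa in harmonics(fund_a, count):
        for fb in harmonics(fund_b, count):
            if abs(fa - fb) <= tolerance * min(fa, fb):
                pairs.append((fa, fb))
    return pairs


if __name__ == "__main__":
    for fa, fb in crowded_harmonics(110.0, 164.81):
        print(f"{fa:.2f} Hz and {fb:.2f} Hz compete for the same range")
```

With these example notes, the harmonics near 330 Hz and 660 Hz nearly coincide. In the terms used above, two instruments whose tones both lean on those harmonics would be asking the system to reproduce the same narrow ranges twice.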

Tech talk aside, these issues are best addressed with common sense: ideally you want to avoid having different instruments whose respective tones will interfere with each other in the front of house mix. This is especially important with respect to vocals. Unlike amplified instruments or even loud acoustic instruments like a drum kit, the singer’s voice relies solely upon the PA system to be heard. If you recall from the previous article, the intelligibility of the human voice depends on accurately reproducing the unique combination of harmonics that make up each vowel sound. Thus, the voice must be given priority in the critical range of frequencies that allow it to be understood. For these reasons, the voice uses more of a PA system’s resources than any other instrument.

Understanding this limitation, the most logical way to optimize a band’s sound for live sound reinforcement should begin by accommodating the singer’s voice. The easiest way to do this is to play a few “acoustic duets” in rehearsal. Select a song that is representative of most of your band’s repertoire, and have each member of the band accompany the singer one at a time (use the amplified instrument, but the singer should be acoustic – no microphone), while the rest of the band listens. As the song progresses, have another member adjust the EQ knobs on the accompanist’s amplifier. It may also be worthwhile to test other tone-related settings, including the input gain on the amp, and the graphic EQ and/or distortion pedals (if applicable). Try to establish which of these settings make the singer’s voice difficult to understand. Obviously, each player will have a preference for a certain sound; however, this consideration must be balanced with the overall sound of the band. The “acoustic” duet method is the easiest way to discover where these kinds of conflicts are most prominent. Once each instrument has been “tuned” to complement the singer, the same method can be applied between each pair of individual instruments.

Pay special attention to pairs of instruments which possess a similar sound, like lead and rhythm guitars. The lead channel on guitar amps should stand out because of a difference in tone (with the rest of the band), rather than a volume boost. Relying on a large volume boost for instrumental solos can throw a wrench into an otherwise good mix; by suddenly changing the volume of an amplified instrument, it can bleed into other microphones on stage (drums, vocals, and other guitars), requiring extra attention from a sound tech to adjust many different channels every time there is a solo. This can require so much (unnecessary) work from a tech, that (s)he may give up on “fader jockeying” altogether, leaving you with a “set and forget” mix that sounds okay regardless of what’s going on. On the other hand, a penetrating tone hardly requires a volume boost at all. The ideal solo channel tone should be set up to fill in the frequency range that the singer’s voice would normally occupy during a verse. Using the method described above, this range of frequencies can be used to bring just about anything into the sonic spotlight. Any half-decent sound technician will be trying to organize your mix like this anyway – if your band sounds like this without substantial tweaking, there will be a lot more power available to make magic happen.

Speaking from experience, only about 1 in 20 independent bands actually sound like this, and it is *really addictive* working on this kind of mix. When everything just seems to fall into place without any effort, the technician will have a rare opportunity to use the mixing techniques that usually only come out for major-label touring acts (who have dedicated guitar and drum technicians responsible for setting up gear to sound like this).

MICROPHONES:

Diaphragms are to microphones what drivers are to speakers. These components are the mechanism that interfaces between sound waves and the electrical signals used in a PA system. In order to detect sounds as accurately as possible, they are basically a very light “driver” which, instead of converting an electrical signal into sound waves, induces a current in a wire based on the motion of the surrounding air. Like drivers, these are also susceptible to some peculiar behaviours which can limit their usefulness.

One such phenomenon is the issue of plosives. The formation of certain consonants (puh, ta, ba, for example) causes a pulse of air to be emitted from the mouth. When this pulse is created in close proximity to a microphone diaphragm, it creates a sudden surge in the resulting electrical signal, which is eventually sent to the front of house mix. Because vocals are amplified so heavily compared to other instruments, these artifacts can be very prominent in the FOH mix. These surges are generally a low frequency sound with a large amplitude and they can cause the sound system’s woofers to displace substantially. Because of the resulting increase in driver impedance, a strong plosive can momentarily cover up everything else in the mix. Furthermore, if there are dynamics processors (compressors) in the signal chain (and there almost certainly are), these sudden impulses can cause these processors to unnecessarily clamp down on the signal after the artifact, which can effectively obscure the “offending” signal, or even the entire mix for a moment after the plosive occurred.
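The way a compressor keeps clamping down after a plosive can be sketched with a toy model. This is a deliberately simplified illustration, not the algorithm of any real processor: it works on linear sample values, has an instant attack, and its threshold, ratio, and per-sample release factor are made-up numbers.

```python
def toy_compressor_gains(samples, threshold=0.3, ratio=4.0, release=0.5):
    """Return the gain applied to each sample by a toy compressor.

    The level detector jumps up instantly on a loud sample and then decays
    by `release` per sample, so gain reduction lingers after a spike.
    All parameter values are hypothetical.
    """
    envelope = 0.0
    gains = []
    for sample in samples:
        # Instant attack, exponential release.
        envelope = max(abs(sample), envelope * release)
        if envelope <= threshold:
            gains.append(1.0)  # below threshold: signal passes unchanged
        else:
            # Above threshold: only 1/ratio of the excess is let through.
            compressed = threshold + (envelope - threshold) / ratio
            gains.append(compressed / envelope)
    return gains


if __name__ == "__main__":
    # A steady, quiet vocal level with a single plosive spike at index 5.
    signal = [0.2] * 5 + [1.0] + [0.2] * 4
    for index, gain in enumerate(toy_compressor_gains(signal)):
        print(index, round(gain, 3))
```

In this toy run the sample immediately after the spike is still turned down, even though it is just as quiet as the samples before the plosive. Real compressors release far more slowly than this sketch does, so the dip they cause lasts correspondingly longer.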

Most microphones have a foam pop-screen surrounding the microphone diaphragm to suppress these artifacts; however, there are many cases where this is not 100% effective. If you use a vocal microphone on stage (rappers especially), be aware that you can cause serious mix issues if you enunciate these consonant sounds excessively. If this kind of sound is necessary for artistic reasons, angle the microphone at 45° to your mouth – this takes the edge off the plosives without substantially sacrificing sound quality (the benefits outweigh the costs, at least). Be particularly conscious of this if you are a background vocalist. If one voice is causing this kind of problem with the front of house mix, any rational sound tech will simply turn it down until it no longer presents an issue in the big-picture mix.

The previous article gave a brief description of feedback. From howls to shrieks to rings to rumbles, there are a variety of specific conditions that can cause this phenomenon. Extending upon this explanation, there are two common types of feedback that are easy to avoid. By understanding specifically how these occur, it is possible to reduce the likelihood of this issue.

There is a lot of stigma about “cupping” microphones; according to conventional wisdom, sound technicians unanimously loathe cupped microphones. For anyone unfamiliar with the term, it refers to the practice of wrapping a hand around the bulbous pop screen that covers the microphone diaphragm. From a technical perspective, this has an interesting effect on vocals. Instead of relying on just the resonant cavities normally used to produce speech (oral and nasal), the cupped microphone introduces a new cavity through which the voice is filtered. Like any resonant space, this introduces a number of acoustic phenomena, with very predictable results. First off, the good: cupping a microphone alters the tone of the voice in a way that makes it seem louder in a mix. It accentuates the mid range frequencies which often seem to get buried by distorted guitars and snare drums (among other things). It can also effectively distort the voice, which can be a useful effect to contrast more natural vocal sounds.

Now the disclaimer: by changing the tone of the voice, cupping makes the words harder to understand. As I mentioned earlier, vocals require an enormous amount of power to amplify effectively, even when they are coming from a good, clean signal. By applying an unnatural acoustic filter to the voice, there are certain tone corrections which become necessary to make the words as intelligible as possible. This doesn’t reverse the distortion – it’s a one way street once you make that turn, but with some careful equalization it is possible to increase the intelligibility of the words. Unfortunately this adjustment comes at a great cost to power efficiency. This additional frequency boost required to create an acceptable, intelligible signal also creates a greater likelihood of feedback issues. Finally, cupping will negate, to a large degree, the protection that the integrated pop screen offers against plosives. Creating a tube between your mouth and the diaphragm basically directs every little burst of air directly at the diaphragm.

I spent most of my tech days working for a venue with a hollow stage. This is a horrible construction practice in acoustic applications, but it gave me lots of opportunity to familiarize myself with the associated low-frequency rumbles. This effect is not unique to hollow stages; it occurs all the time to at least some extent, however it tends to be more pronounced in locations where low frequencies are more resonant. With respect to the front of house mix, these rumbles behave similarly to plosives. Because low frequency waves are so large, they have a tendency to disproportionately increase driver impedance, which can temporarily reduce the volume of the rest of the mix.

These rumbles are caused by a number of factors. In combination, they all contribute to a single composite effect. These factors include: the standing wave resonances mentioned above, a related acoustic effect which causes bass frequencies to be more prominent near walls (and especially corners), and also the effect of low frequency sound waves which travel through walls and floors.

Because the air immediately next to walls, floors, and ceilings is immobile, these locations give rise to nodes in the sound waves, that is, these are the places where pressure waves displace (move) the least amount of air. Conversely, the air located exactly one half of a frequency’s wavelength away from the wall will be an antinode. This location will exhibit the greatest possible displacement of air for that particular frequency. For most audible frequencies, this distance is so small that it has no noticeable effect. However, a frequency (and its related harmonic series) whose half-wavelength is equal to the distance from a stationary microphone to a wall (or floor, or ceiling) tends to be pronounced at the mic’s position. Given that there are usually many different microphones around the stage, the composite effect is to make low frequencies more prone to muddiness.
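Taking the half-wavelength rule of thumb above at face value, the frequencies most likely to be emphasized at a given microphone position can be estimated from its distance to the nearest wall. This is a rough sketch that assumes a speed of sound of 343 m/s and a single flat, rigid boundary; the function name and the 2 m example distance are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 degrees Celsius


def half_wavelength_frequencies(distance_m: float, count: int = 3) -> list:
    """Return the frequency whose half-wavelength equals `distance_m`,
    followed by the next members of its harmonic series."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    # Half a wavelength equals the distance, so wavelength = 2 * distance.
    fundamental_hz = SPEED_OF_SOUND_M_S / (2.0 * distance_m)
    return [fundamental_hz * n for n in range(1, count + 1)]


if __name__ == "__main__":
    # Hypothetical example: a microphone 2 m from the back wall.
    for frequency in half_wavelength_frequencies(2.0):
        print(f"{frequency:.2f} Hz")
```

For a microphone 2 m from a wall, this estimate starts at about 86 Hz, squarely in bass territory. A real room has several boundaries at different distances, so treat the result as a starting point for listening rather than a prediction.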

Furthermore, low frequency waves can also travel through solid objects (imagine a hollow plywood stage resonating like the skin on a giant floor tom…). These vibrations can travel through microphone stands, and physically shake the diaphragm of the mic. There are other, less significant, contributors to this issue but it all manifests similarly. This problem will be addressed more thoroughly in the following sections of this series which will deal with each specific instrument.

THE HUMAN EAR:

The final bottleneck your music encounters (on its way to rocking the crowd) relates to how our ears interface with auditory stimuli and how this information is interpreted by the brain. There has been a lot of recent inquiry into this subject and there is a wealth of information available to the interested reader. I highly recommend Dr. Daniel J. Levitin’s This is Your Brain On Music. It is a very accessible read with many anecdotal accounts to bring these ideas to life. A few of these effects are particularly important to live sound reinforcement, but developing an awareness of these effects will serve to the advantage of any serious musician.

First and foremost, the previous article already mentioned that we hear groups of frequencies from the same harmonic series as an individual musical note, and the tone of this note is determined by the relative volume of each harmonic overtone in that sound. As such, there is a lot of leeway in how an instrument’s tone can be sculpted without affecting a listener’s perception of the musical content. Understanding that our brains already “fill in the blanks” to identify the musical note represented by a group of mathematically related frequencies, it doesn’t take an exceptional leap of logic to guess that our brains might fill in other patterns in the sounds that we hear. The most notable such effect is our ability to process the enormous amount of different information detected by each individual ear. Because of the presence of nodes and the effects of phase cancellation (explained in the previous article), the sounds detected at any two listening points are quite a bit different. It’s amazing to consider how effectively our brains can create the perception of such a coherent soundscape from two disparate and incomplete signals. Next time you go to a live show, try standing absolutely still and cover one of your ears. This experiment often reduces otherwise enjoyable music to completely unintelligible noise. One ear on its own does not detect enough information to make sense of what you’re hearing. Even if you just move around a little bit (with one ear still covered), your brain will begin to compensate for the presence of nodes and phase cancellation, filling in progressively more of the blanks, enabling a much better perception of the complete soundscape.

Because each listening point within a room is different depending on its relationship to sound sources (amps, monitors, front of house), resonances in the room, surfaces that reflect sounds, and the acoustic absorption of other bodies, live sound reinforcement is accomplished by presenting the best possible sound to the majority of the room. Because there is always an uneven spread of directional sounds, it is possible to over-saturate wide areas within a room with specific frequencies. If this occurs to a great extent, it can make even the “best possible” mix sound terrible from most of the listening points in a room. This is most often the result of a guitar amp that’s too loud (surprise, surprise), but it can occur anywhere within the range of any amplifier’s sound. In fact, if all of the amplifiers in a band are loud enough, they can excite the resonances in the room enough to make even a perfect mix sound so uneven that it is impossible for our brains to make sense of it – just because of the acoustic influence of the room.

Our ears detect sound with a mechanism similar to a microphone diaphragm. There is a limit to the ear-drum’s range of motion, and at very high volumes, this creates an effect similar to the behaviour previously illustrated with reference to speaker drivers. If you’re in a small room, and the amps are loud, you must be careful to leave room for the PA’s contribution to your sound. Quite simply, our hearing apparatus (ears and brain) can only make sense of auditory stimuli up to a limited volume. After that threshold is reached, any additional volume will actually make the complete mix sound worse (less intelligible).

When faced with continued high volume sounds, our brains eventually get used to the signals received by our pounding eardrums. In order to make sense of these unusually distorted stimuli, our brains adapt to make sense of these signals. Interestingly, this effect also partially corrects for ranges of frequencies that are over saturated. Essentially, our brains begin to progressively ignore any frequencies that don’t contain useful information. Unfortunately, this takes a couple of hours to kick in, and then, as much as several days for our auditory processing to revert back to normal. This change of perception is referred to as ear-fatigue. If you’ve ever gone through a phase of listening to high-volume music in headphones, you may have noticed that the music seems quieter each subsequent day, requiring you to turn it up further to get the same perceptual effect. In a live setting, this can make an entire room full of spectators increasingly unable to make sense of even a really good mix over the course of a show. Managing the audience’s ear-fatigue throughout the night is another aspect of a sound tech’s job, and another of the many legitimate reasons that you may be asked to turn an amp down.

SUMMARY:

It takes years on the job as a live sound technician to first appreciate and then learn to exploit these effects consistently for a wide range of musical styles. The contents of this article are truly the holy grail of live sound reinforcement. These are the considerations that, more than any other factor, create the larger-than-life aesthetic obtained by major label acts. Whether these factors are understood in terms of the underlying physics (as I have presented them here), or as rules-of-thumb (likely acquired by chatting with technicians during long hours on a tour bus), the end result is predictable, and will make any band an absolute joy to mix, whether the music is to the technician’s personal taste or not. Bands that can pull off this balancing act make everybody look like a superstar, from the booking agent, to the sound tech, to the venue, and especially, the band itself. These are the acts that get a call to open for the big-name shows when the opportunity arises.

- Adjust the tone of each instrument in the band to avoid conflicts.

- The “acoustic duet” method described earlier in this article should be used to adjust every possible pair of instruments in the band to complement each other.

- This method can also be used to tune lead-guitar channels to occupy the same frequency range as the voice during solos. To do this, just increase the settings that tend to cover up the voice. Do not forget to switch off this channel during the verse!

- When you’re buddy-checking each amp’s volume against the drummer (as suggested in the previous article), also listen to how the room acoustics may emphasize the problem-frequencies that could conflict with vocals.

- It is far more effective (and fun for the tech) to beef-up an “incomplete” amp tone than to fight with an acoustically over-saturated room. If in doubt, use a slightly thinner tone, leaving some room for the tech to work.

- Because almost every room tends to exaggerate low-frequency rumbles, it is better to err on the side of caution when setting up instruments in this range. It is much easier to boost the bass on several appropriate signals than it is to downplay an overriding acoustic problem that is leaking into all of the microphones on stage. When you’ve finished setting the tone on guitar/bass amps, it is always a good idea to turn down the bass a little bit lower than what may seem “just right.” Most PA systems have disproportionately loud subwoofers, so there is usually plenty of power available for a sound tech to perform the fine-tuning in this range.

- When setting up for a sound check, ask the sound technician where to place the amplifiers.

- Every room and stage is acoustically unique, and a house sound technician is likely aware of the many peculiarities that may be present. Speaking from experience, I didn’t always volunteer this information to every single band because it often led to unnecessarily long conversations during setup. These tendencies may be the result of many factors, but it’s generally a good idea to ask for and follow this advice, even if it is as trivial as moving an amp forward a couple of feet on the stage.

- As a general rule, avoid placing bass amps near walls, and especially in corners. Although this will make them seem louder, this location will tend to excite room resonances, so the tone will become uneven through the full range of the instrument, and it is more difficult to hear musical details when the instrument’s sound is inconsistent.

- Avoid placing low-tuned drums (bass drums and floor toms) overly close to walls. If their placement corresponds to an antinode with a similar frequency to the drum’s resonance, these drums are prone to reinforcing other low rumbles. Each individual factor contributing to this effect makes it harder to deal with.

- Use amplified vocal microphones in rehearsals, and develop habits that will avoid the issues described in this article.

- Avoid causing plosives. If some particular lyrics seem to emphasize this effect, practice angling the microphone at 45° to your mouth during the offending passage. Do not enunciate hard consonants (puh, ta, ba). Any sensible tech will turn you way down if these plosives are causing issues in the FOH mix.

- That said, do try to exaggerate the difference between various vowel sounds. This will make the vocal signal much easier to fit in the mix, and will require the least amount of power-sucking, unnatural-sounding adjustment to the tone.

- Only cup microphones if you require one of the “good” effects discussed in this article. Many singers cup because they think that it makes them louder; in fact, altering the tone of the voice makes feedback more likely to occur at an equivalent volume, and EQ adjustments (to make the altered voice sound intelligible) require more power than a signal generated using normal mic technique.

- If your band has a dedicated singer, be aware that low-frequency rumbles are transmitted through the floor, into the mic stand, and then to the microphone diaphragm. The best practice is to remove the mic from the stand in order to isolate it from these effects. I know that dancing with a mic stand looks cool, but sometimes it can make the whole mix sound like garbage.

- There are a number of factors that influence the maximum volume that a room can handle. Be realistic about how much overdrive a guitar’s tone really requires, and balance this consideration with how it interacts with the room’s acoustics. Remember that the audience will perceive a more complete sound than you may think. When a technician asks you to adjust an amp’s volume, the reasoning is often based on how well the directional sound is spreading, how the tone is interacting with other signals, how much the audience’s bodies are absorbing, how much it is leaking into other microphones, etc.

- Be quick to respond to these kinds of requests, because they are often quite urgent. Asking for changes to stage volume is a last resort when an issue can’t be resolved with a PA system’s rather comprehensive controls. It’s also a sign that the tech wants to do some serious work on the mix. Try to comply as quickly as possible, because it can take even a very good tech some time to identify the root cause of a larger mix problem. No tech likes to initiate major changes after sound check, so any late requests (s)he might voice are probably important.
